Memilih model yang tepat untuk analisa bisnis.

Melanjutkan pembicaraan Exploratory Data Analysis di posting sebelumnya, di sini akan dibahas 2 model regresi, yaitu Logistic Regression dan Random Forest Regression. 2 Model ini banyak dipakai dalam bisnis ecommerce, juga bisnis sejenis lainnya, dimana banyak variable yang saling terkait, sehingga sulit bagi manajemen traditional untuk sekedar menebak variable mana yang paling berpengaruh terhadap kelangsungan bisnisnya.

Karena orientasi bisnis adalah profit atas ROI(Rate of Investmen), maka perlu dicari model yang tepat, agar manajemen tidak meraba raba bahkan menebak variable mana yang paling berpengaruh, karena hal tersebut akan beresiko tinggi dan mengeluarkan banyak biaya jika salah dalam mengeksekusi kebijakan.

Di akhir artikel saya akan menyajikan skenario bisnis hipotetis di mana dengan menggunakan model yang tepat dapat memproyeksikan penghematan tahunan sebesar $4juta dalam biaya retensi pelanggan. Penghematan biaya ini dicapai dengan mengoptimalkan ambang model Logistic Regression. Di sini dibuat beberapa asumsi dasar tentang akuisisi pelanggan dan biaya retensi pelanggan, untuk model perusahaan telekomunikasi.

#---Model prediction

Akan diuji terlebih dahulu antara Model Logistic Regression dan Model Random Forest

dengan test validasi dan Kfold validation untuk menghindari over fitting, berikut ulasannya dalam script R:

#---Logistic Regression

library(caret)

library(tidyverse)

library(miscset)

#---Variable (-customerID) dikeluarkan

df <- read.csv("d:/churn/WA_Fn-UseC_-Telco-Customer-Churn.csv")

df <- df %>% select(-customerID)

# train/test split; 75%/25%

# setting the seed for reproducibility

set.seed(5)

inTrain <- createDataPartition(y = df$Churn, p=0.75, list=FALSE)

train <- df[inTrain,]

test <- df[-inTrain,]

# fitting the model

fit <- glm(Churn~., data=train, family=binomial)

# making predictions

churn.probs <- predict(fit, test, type="response")

head(churn.probs)

## 1 2 3 4 5 6

## 0.32756804 0.77302887 0.56592677 0.20112771 0.05152568 0.15085976

# converting probabilities to classes; "Yes" or "No"

contrasts(df$Churn) # Yes = 1, No = 0

## Yes

## No 0

## Yes 1

glm.pred = rep("No", length(churn.probs))

glm.pred[churn.probs > 0.5] = "Yes"

confusionMatrix(factor(glm.pred), test$Churn, positive = "Yes")

## Confusion Matrix and Statistics

##

## Reference

## Prediction No Yes

## No 1165 205

## Yes 128 262

##

## Accuracy : 0.8108

## 95% CI : (0.7917, 0.8288)

## No Information Rate : 0.7347

## P-Value [Acc > NIR] : 4.239e-14

##

## Kappa : 0.4877

## Mcnemar's Test P-Value : 3.117e-05

##

## Sensitivity : 0.5610

## Specificity : 0.9010

## Pos Pred Value : 0.6718

## Neg Pred Value : 0.8504

## Prevalence : 0.2653

## Detection Rate : 0.1489

## Detection Prevalence : 0.2216

## Balanced Accuracy : 0.7310

##

## 'Positive' Class : Yes

##

#---Membaca Confution Matrix dan Statistics

Didapat Akurasi 81%.

Beberapa metrik lain yang dilaporkan adalah ukuran yang lebih baik, karena kelas respons sedikit tidak seimbang (~ 73% = Tidak, ~ 27% = Ya).

Sensitivitas, yang merupakan ukuran dari tingkat positif sejati (TP / (TP + FN)), adalah 56%.

Spesifisitas, atau tingkat negatif sejati (TN / (TN + FP)), adalah 90%.

Ini memberitahu kita bahwa model kita adalah 56% akurat dalam mengidentifikasi dengan benar positif yang sebenarnya Dengan kata lain, model ini telah mengidentifikasi 56% orang yang benar-benar churn(berhenti).

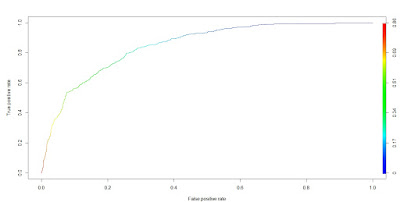

Metrik lain yang bermanfaat adalah AUC. Ini adalah area di bawah kurva karakteristik operasi penerima (ROC). Secara default, saya menggunakan 0,5 sebagai ambang untuk membuat prediksi dari probabilitas. Sering kali ini tidak optimal, sehingga kurva ROC dibuat untuk memplot angka positif sejati vs tingkat positif palsu (y = TP, x = FP).

AUC dapat mengambil nilai apa pun antara 0 dan 1. Model dasar yang digunakan adalah prediktor acak, yang memiliki nilai 0,5. Semakin jauh nilai ini dari 0,5, semakin baik, dengan model yang ideal memiliki AUC 1.

##---Lihat kurva ROC dan AUC

library(ROCR)

# need to create prediction object from ROCR

pr <- prediction(churn.probs, test$Churn)

#--- Fitting the model

fit <- glm(Churn~SeniorCitizen + tenure + MultipleLines + InternetService + StreamingTV + Contract + PaperlessBilling + PaymentMethod + TotalCharges

, data=train,

family=binomial)

#--- making predictions

churn.probs <- predict(fit, test, type="response")

head(churn.probs)

## 1 2 3 4 5 6

## 0.36592800 0.74222067 0.61241105 0.25060677 0.04409168 0.19736195

# converting probabilities to classes; "Yes" or "No"

contrasts(df$Churn) # Yes = 1, No = 0

# converting probabilities to classes; "Yes" or "No"

contrasts(df$Churn) # Yes = 1, No = 0

glm.pred = rep("No", length(churn.probs))

glm.pred[churn.probs > 0.5] = "Yes"

confusionMatrix(factor(glm.pred), test$Churn, positive = "Yes")

## Confusion Matrix and Statistics

##

## Reference

## Prediction No Yes

## No 1157 209

## Yes 136 258

##

## Accuracy : 0.804

## 95% CI : (0.7846, 0.8223)

## No Information Rate : 0.7347

## P-Value [Acc > NIR] : 6.588e-12

##

## Kappa : 0.4708

## Mcnemar's Test P-Value : 0.000106

##

## Sensitivity : 0.5525

## Specificity : 0.8948

## Pos Pred Value : 0.6548

## Neg Pred Value : 0.8470

## Prevalence : 0.2653

## Detection Rate : 0.1466

## Detection Prevalence : 0.2239

## Balanced Accuracy : 0.7236

##

## 'Positive' Class : Yes

##

#The accuracy tetap 80%, the true positive rate (55%) and true negative rate (89%) tetap.

#---Now lets also take a look at the ROC curve, and AUC.

library(ROCR)

# need to create prediction object from ROCR

pr <- prediction(churn.probs, test$Churn)

#--- Plotting ROC curve

prf <- performance(pr, measure = "tpr", x.measure = "fpr")

plot(prf)

#--- AUC value

auc <- performance(pr, measure = "auc")

auc <- auc@y.values[[1]]

auc

## [1] 0.8454775

Nilai AUC for this model is 0.85, sama dengan model semula. Selanjutnya akan dicoba prediksi model Random forest

#---Random Forest

#Saya akan coba model ini konon lebih baik dari Logistic Regression untuk non linear Model.

#Random forest terdiri dari beberapa single dec tree, biasanya low bias tapi high variance.Karena high variance #cenderung overfit

library(randomForest)

churn.rf = randomForest(Churn~., data = train, importance = T)

#churn.rf

##

## Call:

## randomForest(formula = Churn ~ ., data = train, importance = T)

## Type of random forest: classification

## Number of trees: 500

## No. of variables tried at each split: 4

##

## OOB estimate of error rate: 20.29%

## Confusion matrix:

## No Yes class.error

## No 3502 379 0.09765524

## Yes 693 709 0.49429387

churn.predict.prob <- predict(churn.rf, test, type="prob")

churn.predict <- predict(churn.rf, test)

confusionMatrix(churn.predict, test$Churn, positive = "Yes")

## Confusion Matrix and Statistics

##

## Reference

## Prediction No Yes

## No 1148 225

## Yes 145 242

##

## Accuracy : 0.7898

## 95% CI : (0.77, 0.8086)

## No Information Rate : 0.7347

## P-Value [Acc > NIR] : 4.791e-08

##

## Kappa : 0.4296

## Mcnemar's Test P-Value : 4.008e-05

##

## Sensitivity : 0.5182

## Specificity : 0.8879

## Pos Pred Value : 0.6253

## Neg Pred Value : 0.8361

## Prevalence : 0.2653

## Detection Rate : 0.1375

## Detection Prevalence : 0.2199

## Balanced Accuracy : 0.7030

##

## 'Positive' Class : Yes

##

#Nilai accuracy 79%, the true positive rate is 52%, and the true negative rate is 89%.Tidak lebih baik dari #logistic regression, selanjutnya lihat curva auc

library(ROCR)

# need to create prediction object from ROCR

pr <- prediction(churn.predict.prob[,2], test$Churn)

# plotting ROC curve

prf <- performance(pr, measure = "tpr", x.measure = "fpr")

plot(prf)

#---AUC value

auc <- performance(pr, measure = "auc")

auc <- auc@y.values[[1]]

auc

## [1] 0.837611

The AUC is 0.84, mendekati model logistic regression. Lihat variable importan di random forest model.

importance(churn.rf)

## No Yes MeanDecreaseAccuracy

## gender -0.2787850 0.1640010 -0.1070151

## SeniorCitizen 12.5137057 1.0032629 11.7955391

## Partner 0.6681314 -0.2110722 0.4006978

## Dependents -3.5717006 8.8013663 3.5375971

## tenure 26.7636624 29.5199677 48.7934452

## PhoneService 0.4547738 7.2310984 5.0965859

## MultipleLines 3.7343699 7.4371935 8.0811306

## InternetService 15.2651738 21.6388724 23.4215171

## OnlineSecurity 10.3995975 21.7833665 19.4301151

## OnlineBackup 8.1641774 11.0629272 12.7885313

## DeviceProtection 11.1750628 -4.1130469 9.5983045

## TechSupport 9.4310594 29.4754798 21.5097751

## StreamingTV 9.9877053 0.2214763 9.3273850

## StreamingMovies 12.1806516 0.7345354 11.6250767

## Contract 7.1397800 29.7611523 32.5802482

## PaperlessBilling -2.6548883 16.6188362 9.5044340

## PaymentMethod 4.8829898 11.7248708 11.8284691

## MonthlyCharges 17.6834891 16.0277495 28.3442378

## TotalCharges 28.2673591 16.8527671 41.1659411

## MeanDecreaseGini

## gender 43.348639

## SeniorCitizen 34.916600

## Partner 37.067003

## Dependents 31.846637

## tenure 304.225536

## PhoneService 8.170746

## MultipleLines 41.031725

## InternetService 73.350708

## OnlineSecurity 82.194235

## OnlineBackup 45.627216

## DeviceProtection 41.936300

## TechSupport 79.967321

## StreamingTV 34.535218

## StreamingMovies 35.813841

## Contract 146.945538

## PaperlessBilling 42.636922

## PaymentMethod 110.136025

## MonthlyCharges 298.938071

## TotalCharges 339.656579

varImpPlot(churn.rf)

#Some of the features that were important in the logistic regression model, such as tenure and TotalCharges, are #also important to the random forest model. Other features like TechSupport and MonthlyCharges were not significant #in the logistic regression model, but are ranked fairly high for the random forest model.

#---Parameter Tuning

# changing the number of variables to try at each split

# mtry = 8, 12, 16, 20

#--- Fitting the model

churn.rf = randomForest(Churn~., data = train, mtry = 20, importance = T)

churn.predict.prob <- predict(churn.rf, test, type="prob")

churn.predict <- predict(churn.rf, test)

confusionMatrix(churn.predict, test$Churn, positive = "Yes")

## Confusion Matrix and Statistics

##

## Reference

## Prediction No Yes

## No 1144 219

## Yes 149 248

##

## Accuracy : 0.7909

## 95% CI : (0.7711, 0.8097)

## No Information Rate : 0.7347

## P-Value [Acc > NIR] : 2.544e-08

##

## Kappa : 0.4367

## Mcnemar's Test P-Value : 0.0003221

##

## Sensitivity : 0.5310

## Specificity : 0.8848

## Pos Pred Value : 0.6247

## Neg Pred Value : 0.8393

## Prevalence : 0.2653

## Detection Rate : 0.1409

## Detection Prevalence : 0.2256

## Balanced Accuracy : 0.7079

##

## 'Positive' Class : Yes

##

#--- need to create prediction object from ROCR

pr <- prediction(churn.predict.prob[,2], test$Churn)

#--- AUC value

auc <- performance(pr, measure = "auc")

auc <- auc@y.values[[1]]

auc

## [1] 0.8262105

# mtry = 8; AUC = 0.83

# mtry = 12; AUC = 0.83

# mtry = 16; AUC = 0.83

# mtry = 20; AUC = 0.82

#Changing the number of variables to try didn’t improve the model. The resulting AUC decreased slightly to 0.83. #Now I’ll try changing the number of trees.

# changing the number of trees

# ntree = 25, 250, 500, 750

#--- Fitting the model

churn.rf = randomForest(Churn~., data = train, ntree = 750, importance = T)

churn.predict.prob <- predict(churn.rf, test, type="prob")

churn.predict <- predict(churn.rf, test)

confusionMatrix(churn.predict, test$Churn, positive = "Yes")

## Confusion Matrix and Statistics

##

## Reference

## Prediction No Yes

## No 1150 224

## Yes 143 243

##

## Accuracy : 0.7915

## 95% CI : (0.7717, 0.8102)

## No Information Rate : 0.7347

## P-Value [Acc > NIR] : 1.844e-08

##

## Kappa : 0.4338

## Mcnemar's Test P-Value : 2.967e-05

##

## Sensitivity : 0.5203

## Specificity : 0.8894

## Pos Pred Value : 0.6295

## Neg Pred Value : 0.8370

## Prevalence : 0.2653

## Detection Rate : 0.1381

## Detection Prevalence : 0.2193

## Balanced Accuracy : 0.7049

##

## 'Positive' Class : Yes

##

#--- need to create prediction object from ROCR

pr <- prediction(churn.predict.prob[,2], test$Churn)

#--- AUC value

auc <- performance(pr, measure = "auc")

auc <- auc@y.values[[1]]

auc

## [1] 0.8392315

# ntree = 25; AUC = 0.83

# ntree = 250; AUC = 0.84

# ntree = 500; AUC = 0.84

# ntree = 750; AUC = 0.84

#Tidak memperbaiki model juga, so I’ll stick with the original model (mtry = 4, ntree = 500).

#Finally, I’ll use K-fold cross-validation with 10 folds, repeated 3 times, to compare the models.

#---K-fold Cross Validation

Menggunakan 10-fold cross validation, 3 kali perulangan.

#k-fold cross val in caret

set.seed(10)

#--- train control

fitControl <- trainControl(## 10-fold CV

method = "repeatedcv",

number = 10,

## repeated 3 times

repeats = 3,

classProbs = TRUE,

summaryFunction = twoClassSummary)

#--- Logistic Regression Model

logreg <- train(Churn ~., df,

method = "glm",

family = "binomial",

trControl = fitControl,

metric = "ROC")

## ROC Sens Spec

## 0.8453004 0.8960842 0.5519886

#--- Random Forest Model

rf <- train(Churn ~., df,

method = "rf",

trControl = fitControl,

metric = "ROC")

## mtry ROC Sens Spec

## 2 0.8321455 0.9476230 0.3550946

## 16 0.8256092 0.8929878 0.4999195

## 30 0.8193891 0.8894460 0.4986708

#ROC glm 0.84, ROC rf 0.83

#Jadi Logistic Regression terbukti disini lebih baik daripada Random Forest.

#---Evaluasi Cost

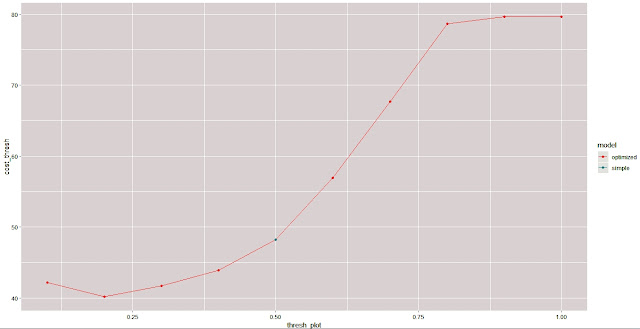

Apa akibatnya bagi bisnis, bila memakai model(Optimation Model) dan tanpa memakai model.

Untuk memulai, saya akan membuat beberapa asumsi terkait biaya. Hasil searching, sepertinya biaya akuisisi pelanggan di industri telekomunikasi adalah sekitar $ 300.

Hasil serching sepertinya biaya akuisisi pelanggan kira-kira lima kali lebih tinggi daripada biaya retensi pelanggan. Saya akan berasumsi bahwa biaya retensi pelanggan saya adalah $ 60. Biaya-biaya ini akan dikeluarkan selama false positives (memprediksi pelanggan akan berubah ketika mereka benar-benar puas), dan true positives (memprediksi pelanggan benar2 tdk puas). Tidak akan ada biaya yang dikeluarkan untuk prediksi negatif yang sebenarnya (benar memprediksi pelanggan senang).

Persamaan cost nya sbb:

cost = FN(300) + TP(60) + FP(60) + TN(0)

Digunakan model logistic regression karena disini hasilnya lebih akurat daripada model Random forest

#--- Fitting the logistic regression model

fit <- glm(Churn~., data=train, family=binomial)

#--- Making predictions

churn.probs <- predict(fit, test, type="response")

head(churn.probs)

#--- Converting probabilities to classes; "Yes" or "No"

contrasts(df$Churn) # Yes = 1, No = 0

glm.pred = rep("No", length(churn.probs))

glm.pred[churn.probs > 0.5] = "Yes"

x <- confusionMatrix(factor(glm.pred), test$Churn, positive = "Yes")

#--- cost as a function of threshold

thresh <- seq(0.1,1.0, length = 10)

cost = rep(0,length(thresh))

for (i in 1:length(thresh)){

glm.pred = rep("No", length(churn.probs))

glm.pred[churn.probs > thresh[i]] = "Yes"

x <- confusionMatrix(factor(glm.pred), test$Churn, positive = "Yes")

TN <- x$table[1]/1760

FP <- x$table[2]/1760

FN <- x$table[3]/1760

TP <- x$table[4]/1760

cost[i] = FN*300 + TP*60 + FP*60 + TN*0

}

min(cost)

[1] 40.15909

#--- Simple model - assume threshold is 0.5

glm.pred = rep("No", length(churn.probs))

glm.pred[churn.probs > 0.5] = "Yes"

x <- confusionMatrix(factor(glm.pred), test$Churn, positive = "Yes")

TN <- x$table[1]/1760

FP <- x$table[2]/1760

FN <- x$table[3]/1760

TP <- x$table[4]/1760

cost_simple = FN*300 + TP*60 + FP*60 + TN*0

cost_simple

[1] 48.20455

#--- Putting results in a dataframe for plotting

dat <- data.frame(

model = c(rep("optimized",10),"simple"),

cost_thresh = c(cost,cost_simple),

thresh_plot = c(thresh,0.5)

)

ggplot(dat, aes(x = thresh_plot, y = cost_thresh, group = model, colour = model)) +

geom_line() +

geom_point()

#--- cost savings of optimized model (threshold = 0.2) compared to baseline model (threshold = 0.5)

savings_per_customer = cost_simple - min(cost)

total_savings = 500000*savings_per_customer

## total savings: 4107955

#---Kesimpulan

Jika menggunakan optimasi model diatas akan didapat penghematan cost (savings_per_customer = cost_simple - min(cost)=8.045455).

Diasumsikan perusahaan ini punya pelanggan 500,000, maka akan ada penghematan 500000*savings_per_customer = 4107955.

Contoh analisa di atas menggambarkan bahwa optimizing a machine learning model dijamin keakurataanya, dan salah satu alat yang diperlukan dalam bisnis modern di era digital saat ini.

#---Referensi:

https://datascienceplus.com/perform-logistic-regression-in-r/

https://www.datacamp.com/community/tutorials/logistic-regression-R

https://www.displayr.com/how-to-interpret-logistic-regression-coefficients/

https://www.tutorialspoint.com/r/r_random_forest.htm

https://educationalresearchtechniques.com/2017/05/10/random-forest-regression-trees-in-r/

Melanjutkan pembicaraan Exploratory Data Analysis di posting sebelumnya, di sini akan dibahas 2 model regresi, yaitu Logistic Regression dan Random Forest Regression. 2 Model ini banyak dipakai dalam bisnis ecommerce, juga bisnis sejenis lainnya, dimana banyak variable yang saling terkait, sehingga sulit bagi manajemen traditional untuk sekedar menebak variable mana yang paling berpengaruh terhadap kelangsungan bisnisnya.

|

| USA Map |

Karena orientasi bisnis adalah profit atas ROI(Rate of Investmen), maka perlu dicari model yang tepat, agar manajemen tidak meraba raba bahkan menebak variable mana yang paling berpengaruh, karena hal tersebut akan beresiko tinggi dan mengeluarkan banyak biaya jika salah dalam mengeksekusi kebijakan.

Di akhir artikel saya akan menyajikan skenario bisnis hipotetis di mana dengan menggunakan model yang tepat dapat memproyeksikan penghematan tahunan sebesar $4juta dalam biaya retensi pelanggan. Penghematan biaya ini dicapai dengan mengoptimalkan ambang model Logistic Regression. Di sini dibuat beberapa asumsi dasar tentang akuisisi pelanggan dan biaya retensi pelanggan, untuk model perusahaan telekomunikasi.

Akan diuji terlebih dahulu antara Model Logistic Regression dan Model Random Forest

dengan test validasi dan Kfold validation untuk menghindari over fitting, berikut ulasannya dalam script R:

#---Logistic Regression

library(caret)

library(tidyverse)

library(miscset)

#---Variable (-customerID) dikeluarkan

df <- read.csv("d:/churn/WA_Fn-UseC_-Telco-Customer-Churn.csv")

df <- df %>% select(-customerID)

# train/test split; 75%/25%

# setting the seed for reproducibility

set.seed(5)

inTrain <- createDataPartition(y = df$Churn, p=0.75, list=FALSE)

train <- df[inTrain,]

test <- df[-inTrain,]

# fitting the model

fit <- glm(Churn~., data=train, family=binomial)

# making predictions

churn.probs <- predict(fit, test, type="response")

head(churn.probs)

## 1 2 3 4 5 6

## 0.32756804 0.77302887 0.56592677 0.20112771 0.05152568 0.15085976

# converting probabilities to classes; "Yes" or "No"

contrasts(df$Churn) # Yes = 1, No = 0

## Yes

## No 0

## Yes 1

glm.pred = rep("No", length(churn.probs))

glm.pred[churn.probs > 0.5] = "Yes"

confusionMatrix(factor(glm.pred), test$Churn, positive = "Yes")

## Confusion Matrix and Statistics

##

## Reference

## Prediction No Yes

## No 1165 205

## Yes 128 262

##

## Accuracy : 0.8108

## 95% CI : (0.7917, 0.8288)

## No Information Rate : 0.7347

## P-Value [Acc > NIR] : 4.239e-14

##

## Kappa : 0.4877

## Mcnemar's Test P-Value : 3.117e-05

##

## Sensitivity : 0.5610

## Specificity : 0.9010

## Pos Pred Value : 0.6718

## Neg Pred Value : 0.8504

## Prevalence : 0.2653

## Detection Rate : 0.1489

## Detection Prevalence : 0.2216

## Balanced Accuracy : 0.7310

##

## 'Positive' Class : Yes

##

#---Membaca Confution Matrix dan Statistics

Didapat Akurasi 81%.

Beberapa metrik lain yang dilaporkan adalah ukuran yang lebih baik, karena kelas respons sedikit tidak seimbang (~ 73% = Tidak, ~ 27% = Ya).

Sensitivitas, yang merupakan ukuran dari tingkat positif sejati (TP / (TP + FN)), adalah 56%.

Spesifisitas, atau tingkat negatif sejati (TN / (TN + FP)), adalah 90%.

Ini memberitahu kita bahwa model kita adalah 56% akurat dalam mengidentifikasi dengan benar positif yang sebenarnya Dengan kata lain, model ini telah mengidentifikasi 56% orang yang benar-benar churn(berhenti).

Metrik lain yang bermanfaat adalah AUC. Ini adalah area di bawah kurva karakteristik operasi penerima (ROC). Secara default, saya menggunakan 0,5 sebagai ambang untuk membuat prediksi dari probabilitas. Sering kali ini tidak optimal, sehingga kurva ROC dibuat untuk memplot angka positif sejati vs tingkat positif palsu (y = TP, x = FP).

AUC dapat mengambil nilai apa pun antara 0 dan 1. Model dasar yang digunakan adalah prediktor acak, yang memiliki nilai 0,5. Semakin jauh nilai ini dari 0,5, semakin baik, dengan model yang ideal memiliki AUC 1.

|

| ROC |

##---Lihat kurva ROC dan AUC

library(ROCR)

# need to create prediction object from ROCR

pr <- prediction(churn.probs, test$Churn)

# plotting ROC curve

prf <- performance(pr, measure = "tpr", x.measure = "fpr")

par(mar=c(1,1,1,1))

plot(prf) #ok

# AUC value

auc <- performance(pr, measure = "auc")

auc <- auc@y.values[[1]]

auc

## [1] 0.8481338

AUC 0.85 > 0.5 berarti baik

#---Feature Selection

#Improving this model , we can take a look at the summary of the fit and identify which features are significant (p-value < 0.05).

# summary of the model

summary(fit)

fit <- glm(Churn~SeniorCitizen + tenure + MultipleLines + InternetService + StreamingTV + Contract + PaperlessBilling + PaymentMethod + TotalCharges

, data=train,

family=binomial)

#--- making predictions

churn.probs <- predict(fit, test, type="response")

head(churn.probs)

## 1 2 3 4 5 6

## 0.36592800 0.74222067 0.61241105 0.25060677 0.04409168 0.19736195

# converting probabilities to classes; "Yes" or "No"

contrasts(df$Churn) # Yes = 1, No = 0

# converting probabilities to classes; "Yes" or "No"

contrasts(df$Churn) # Yes = 1, No = 0

glm.pred = rep("No", length(churn.probs))

glm.pred[churn.probs > 0.5] = "Yes"

confusionMatrix(factor(glm.pred), test$Churn, positive = "Yes")

## Confusion Matrix and Statistics

##

## Reference

## Prediction No Yes

## No 1157 209

## Yes 136 258

##

## Accuracy : 0.804

## 95% CI : (0.7846, 0.8223)

## No Information Rate : 0.7347

## P-Value [Acc > NIR] : 6.588e-12

##

## Kappa : 0.4708

## Mcnemar's Test P-Value : 0.000106

##

## Sensitivity : 0.5525

## Specificity : 0.8948

## Pos Pred Value : 0.6548

## Neg Pred Value : 0.8470

## Prevalence : 0.2653

## Detection Rate : 0.1466

## Detection Prevalence : 0.2239

## Balanced Accuracy : 0.7236

##

## 'Positive' Class : Yes

##

#The accuracy tetap 80%, the true positive rate (55%) and true negative rate (89%) tetap.

#---Now lets also take a look at the ROC curve, and AUC.

library(ROCR)

# need to create prediction object from ROCR

pr <- prediction(churn.probs, test$Churn)

#--- Plotting ROC curve

prf <- performance(pr, measure = "tpr", x.measure = "fpr")

plot(prf)

#--- AUC value

auc <- performance(pr, measure = "auc")

auc <- auc@y.values[[1]]

auc

## [1] 0.8454775

Nilai AUC for this model is 0.85, sama dengan model semula. Selanjutnya akan dicoba prediksi model Random forest

#---Random Forest

#Saya akan coba model ini konon lebih baik dari Logistic Regression untuk non linear Model.

#Random forest terdiri dari beberapa single dec tree, biasanya low bias tapi high variance.Karena high variance #cenderung overfit

library(randomForest)

churn.rf = randomForest(Churn~., data = train, importance = T)

#churn.rf

##

## Call:

## randomForest(formula = Churn ~ ., data = train, importance = T)

## Type of random forest: classification

## Number of trees: 500

## No. of variables tried at each split: 4

##

## OOB estimate of error rate: 20.29%

## Confusion matrix:

## No Yes class.error

## No 3502 379 0.09765524

## Yes 693 709 0.49429387

churn.predict.prob <- predict(churn.rf, test, type="prob")

churn.predict <- predict(churn.rf, test)

confusionMatrix(churn.predict, test$Churn, positive = "Yes")

## Confusion Matrix and Statistics

##

## Reference

## Prediction No Yes

## No 1148 225

## Yes 145 242

##

## Accuracy : 0.7898

## 95% CI : (0.77, 0.8086)

## No Information Rate : 0.7347

## P-Value [Acc > NIR] : 4.791e-08

##

## Kappa : 0.4296

## Mcnemar's Test P-Value : 4.008e-05

##

## Sensitivity : 0.5182

## Specificity : 0.8879

## Pos Pred Value : 0.6253

## Neg Pred Value : 0.8361

## Prevalence : 0.2653

## Detection Rate : 0.1375

## Detection Prevalence : 0.2199

## Balanced Accuracy : 0.7030

##

## 'Positive' Class : Yes

##

#Nilai accuracy 79%, the true positive rate is 52%, and the true negative rate is 89%.Tidak lebih baik dari #logistic regression, selanjutnya lihat curva auc

library(ROCR)

# need to create prediction object from ROCR

pr <- prediction(churn.predict.prob[,2], test$Churn)

# plotting ROC curve

prf <- performance(pr, measure = "tpr", x.measure = "fpr")

plot(prf)

#---AUC value

auc <- performance(pr, measure = "auc")

auc <- auc@y.values[[1]]

auc

## [1] 0.837611

The AUC is 0.84, mendekati model logistic regression. Lihat variable importan di random forest model.

importance(churn.rf)

## No Yes MeanDecreaseAccuracy

## gender -0.2787850 0.1640010 -0.1070151

## SeniorCitizen 12.5137057 1.0032629 11.7955391

## Partner 0.6681314 -0.2110722 0.4006978

## Dependents -3.5717006 8.8013663 3.5375971

## tenure 26.7636624 29.5199677 48.7934452

## PhoneService 0.4547738 7.2310984 5.0965859

## MultipleLines 3.7343699 7.4371935 8.0811306

## InternetService 15.2651738 21.6388724 23.4215171

## OnlineSecurity 10.3995975 21.7833665 19.4301151

## OnlineBackup 8.1641774 11.0629272 12.7885313

## DeviceProtection 11.1750628 -4.1130469 9.5983045

## TechSupport 9.4310594 29.4754798 21.5097751

## StreamingTV 9.9877053 0.2214763 9.3273850

## StreamingMovies 12.1806516 0.7345354 11.6250767

## Contract 7.1397800 29.7611523 32.5802482

## PaperlessBilling -2.6548883 16.6188362 9.5044340

## PaymentMethod 4.8829898 11.7248708 11.8284691

## MonthlyCharges 17.6834891 16.0277495 28.3442378

## TotalCharges 28.2673591 16.8527671 41.1659411

## MeanDecreaseGini

## gender 43.348639

## SeniorCitizen 34.916600

## Partner 37.067003

## Dependents 31.846637

## tenure 304.225536

## PhoneService 8.170746

## MultipleLines 41.031725

## InternetService 73.350708

## OnlineSecurity 82.194235

## OnlineBackup 45.627216

## DeviceProtection 41.936300

## TechSupport 79.967321

## StreamingTV 34.535218

## StreamingMovies 35.813841

## Contract 146.945538

## PaperlessBilling 42.636922

## PaymentMethod 110.136025

## MonthlyCharges 298.938071

## TotalCharges 339.656579

varImpPlot(churn.rf)

#Some of the features that were important in the logistic regression model, such as tenure and TotalCharges, are #also important to the random forest model. Other features like TechSupport and MonthlyCharges were not significant #in the logistic regression model, but are ranked fairly high for the random forest model.

#---Parameter Tuning

# changing the number of variables to try at each split

# mtry = 8, 12, 16, 20

#--- Fitting the model

churn.rf = randomForest(Churn~., data = train, mtry = 20, importance = T)

churn.predict.prob <- predict(churn.rf, test, type="prob")

churn.predict <- predict(churn.rf, test)

confusionMatrix(churn.predict, test$Churn, positive = "Yes")

## Confusion Matrix and Statistics

##

## Reference

## Prediction No Yes

## No 1144 219

## Yes 149 248

##

## Accuracy : 0.7909

## 95% CI : (0.7711, 0.8097)

## No Information Rate : 0.7347

## P-Value [Acc > NIR] : 2.544e-08

##

## Kappa : 0.4367

## Mcnemar's Test P-Value : 0.0003221

##

## Sensitivity : 0.5310

## Specificity : 0.8848

## Pos Pred Value : 0.6247

## Neg Pred Value : 0.8393

## Prevalence : 0.2653

## Detection Rate : 0.1409

## Detection Prevalence : 0.2256

## Balanced Accuracy : 0.7079

##

## 'Positive' Class : Yes

##

#--- need to create prediction object from ROCR

pr <- prediction(churn.predict.prob[,2], test$Churn)

#--- AUC value

auc <- performance(pr, measure = "auc")

auc <- auc@y.values[[1]]

auc

## [1] 0.8262105

# mtry = 8; AUC = 0.83

# mtry = 12; AUC = 0.83

# mtry = 16; AUC = 0.83

# mtry = 20; AUC = 0.82

#Changing the number of variables to try didn’t improve the model. The resulting AUC decreased slightly to 0.83. #Now I’ll try changing the number of trees.

# changing the number of trees

# ntree = 25, 250, 500, 750

#--- Fitting the model

churn.rf = randomForest(Churn~., data = train, ntree = 750, importance = T)

churn.predict.prob <- predict(churn.rf, test, type="prob")

churn.predict <- predict(churn.rf, test)

confusionMatrix(churn.predict, test$Churn, positive = "Yes")

## Confusion Matrix and Statistics

##

## Reference

## Prediction No Yes

## No 1150 224

## Yes 143 243

##

## Accuracy : 0.7915

## 95% CI : (0.7717, 0.8102)

## No Information Rate : 0.7347

## P-Value [Acc > NIR] : 1.844e-08

##

## Kappa : 0.4338

## Mcnemar's Test P-Value : 2.967e-05

##

## Sensitivity : 0.5203

## Specificity : 0.8894

## Pos Pred Value : 0.6295

## Neg Pred Value : 0.8370

## Prevalence : 0.2653

## Detection Rate : 0.1381

## Detection Prevalence : 0.2193

## Balanced Accuracy : 0.7049

##

## 'Positive' Class : Yes

##

#--- need to create prediction object from ROCR

pr <- prediction(churn.predict.prob[,2], test$Churn)

#--- AUC value

auc <- performance(pr, measure = "auc")

auc <- auc@y.values[[1]]

auc

## [1] 0.8392315

# ntree = 25; AUC = 0.83

# ntree = 250; AUC = 0.84

# ntree = 500; AUC = 0.84

# ntree = 750; AUC = 0.84

#Tidak memperbaiki model juga, so I’ll stick with the original model (mtry = 4, ntree = 500).

#Finally, I’ll use K-fold cross-validation with 10 folds, repeated 3 times, to compare the models.

#---K-fold Cross Validation

Menggunakan 10-fold cross validation, 3 kali perulangan.

#k-fold cross val in caret

set.seed(10)

#--- train control

fitControl <- trainControl(## 10-fold CV

method = "repeatedcv",

number = 10,

## repeated 3 times

repeats = 3,

classProbs = TRUE,

summaryFunction = twoClassSummary)

#--- Logistic Regression Model

logreg <- train(Churn ~., df,

method = "glm",

family = "binomial",

trControl = fitControl,

metric = "ROC")

## ROC Sens Spec

## 0.8453004 0.8960842 0.5519886

#--- Random Forest Model

rf <- train(Churn ~., df,

method = "rf",

trControl = fitControl,

metric = "ROC")

## mtry ROC Sens Spec

## 2 0.8321455 0.9476230 0.3550946

## 16 0.8256092 0.8929878 0.4999195

## 30 0.8193891 0.8894460 0.4986708

#ROC glm 0.84, ROC rf 0.83

#Jadi Logistic Regression terbukti disini lebih baik daripada Random Forest.

#---Evaluasi Cost

|

| Optimation Model |

Apa akibatnya bagi bisnis, bila memakai model(Optimation Model) dan tanpa memakai model.

Untuk memulai, saya akan membuat beberapa asumsi terkait biaya. Hasil searching, sepertinya biaya akuisisi pelanggan di industri telekomunikasi adalah sekitar $ 300.

Hasil serching sepertinya biaya akuisisi pelanggan kira-kira lima kali lebih tinggi daripada biaya retensi pelanggan. Saya akan berasumsi bahwa biaya retensi pelanggan saya adalah $ 60. Biaya-biaya ini akan dikeluarkan selama false positives (memprediksi pelanggan akan berubah ketika mereka benar-benar puas), dan true positives (memprediksi pelanggan benar2 tdk puas). Tidak akan ada biaya yang dikeluarkan untuk prediksi negatif yang sebenarnya (benar memprediksi pelanggan senang).

Persamaan cost nya sbb:

cost = FN(300) + TP(60) + FP(60) + TN(0)

Digunakan model logistic regression karena disini hasilnya lebih akurat daripada model Random forest

#--- Fitting the logistic regression model

fit <- glm(Churn~., data=train, family=binomial)

#--- Making predictions

churn.probs <- predict(fit, test, type="response")

head(churn.probs)

#--- Converting probabilities to classes; "Yes" or "No"

contrasts(df$Churn) # Yes = 1, No = 0

glm.pred = rep("No", length(churn.probs))

glm.pred[churn.probs > 0.5] = "Yes"

x <- confusionMatrix(factor(glm.pred), test$Churn, positive = "Yes")

#--- cost as a function of threshold

thresh <- seq(0.1,1.0, length = 10)

cost = rep(0,length(thresh))

for (i in 1:length(thresh)){

glm.pred = rep("No", length(churn.probs))

glm.pred[churn.probs > thresh[i]] = "Yes"

x <- confusionMatrix(factor(glm.pred), test$Churn, positive = "Yes")

TN <- x$table[1]/1760

FP <- x$table[2]/1760

FN <- x$table[3]/1760

TP <- x$table[4]/1760

cost[i] = FN*300 + TP*60 + FP*60 + TN*0

}

min(cost)

[1] 40.15909

#--- Simple model - assume threshold is 0.5

glm.pred = rep("No", length(churn.probs))

glm.pred[churn.probs > 0.5] = "Yes"

x <- confusionMatrix(factor(glm.pred), test$Churn, positive = "Yes")

TN <- x$table[1]/1760

FP <- x$table[2]/1760

FN <- x$table[3]/1760

TP <- x$table[4]/1760

cost_simple = FN*300 + TP*60 + FP*60 + TN*0

cost_simple

[1] 48.20455

#--- Putting results in a dataframe for plotting

dat <- data.frame(

model = c(rep("optimized",10),"simple"),

cost_thresh = c(cost,cost_simple),

thresh_plot = c(thresh,0.5)

)

ggplot(dat, aes(x = thresh_plot, y = cost_thresh, group = model, colour = model)) +

geom_line() +

geom_point()

#--- cost savings of optimized model (threshold = 0.2) compared to baseline model (threshold = 0.5)

savings_per_customer = cost_simple - min(cost)

total_savings = 500000*savings_per_customer

## total savings: 4107955

#---Kesimpulan

Jika menggunakan optimasi model diatas akan didapat penghematan cost (savings_per_customer = cost_simple - min(cost)=8.045455).

Diasumsikan perusahaan ini punya pelanggan 500,000, maka akan ada penghematan 500000*savings_per_customer = 4107955.

Contoh analisa di atas menggambarkan bahwa optimizing a machine learning model dijamin keakurataanya, dan salah satu alat yang diperlukan dalam bisnis modern di era digital saat ini.

#---Referensi:

https://datascienceplus.com/perform-logistic-regression-in-r/

https://www.datacamp.com/community/tutorials/logistic-regression-R

https://www.displayr.com/how-to-interpret-logistic-regression-coefficients/

https://www.tutorialspoint.com/r/r_random_forest.htm

https://educationalresearchtechniques.com/2017/05/10/random-forest-regression-trees-in-r/

Tidak ada komentar:

Posting Komentar